One refrain we all hear when the topic of code comments comes up is “My code is self-documenting.” On the surface this makes sense: why write more than you have to? Unfortunately, the way this is usually applied throws the baby out with the bathwater, leaving us in a worse position than we were in with too many comments.

I’ve never met anyone who would argue that the code we create should be difficult to understand, or that how the code executes and where the flow of control goes should be hidden. Our code needs to be easy to understand so that, as we maintain it in the future, we do not have to rewrite entire classes just to add a bit of functionality.

So, what is a good code comment?

“Comments should explain WHY,” to paraphrase the colleague of mine who gave me this pointer.

Code Comments

How often have you come across code that works but does something in a crazy way when a much simpler option seems obvious to you? Only when you implement your simple solution do bugs suddenly appear, and you finally understand why those lines existed.

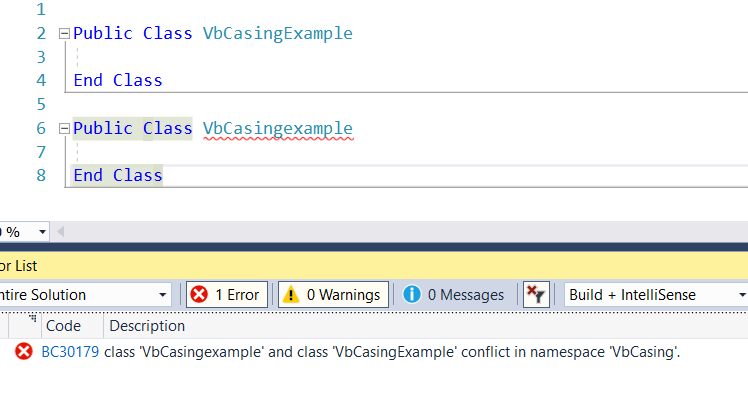

An example of a superfluous comment can be found in the String class of the .NET Framework Reference Source: “Range check everything.” Yes, I can see that’s being done. You obviously thought it important enough to point out, but why? Why is it important to note that you are doing all the range checks? A comment like this raises more questions and answers none.

Situations like the surprising workaround, where the ‘obvious’ simplification breaks things, are where code comments become invaluable and will save you and your colleagues hours in the future. Spend the time to explain why you made the design decisions you did. When you apply a strange workaround, explain why you chose it instead of the more ‘obvious’ solution.
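To make the idea concrete, here is a small Python sketch of a comment that explains the why behind a workaround. The function name and the scenario (callers relying on first-seen order) are hypothetical, made up purely for illustration.

```python
def dedupe_keep_order(items):
    """Return items with duplicates removed, keeping first-seen order."""
    # WHY: the 'obvious' one-liner, list(set(items)), is deliberately avoided.
    # A set does not preserve the original order, and (in this hypothetical
    # scenario) callers rely on first-seen order. The set below is used only
    # for fast membership checks, never as the result.
    seen = set()
    result = []
    for item in items:
        if item not in seen:
            seen.add(item)
            result.append(item)
    return result
```

Without that comment, the next maintainer is likely to "simplify" the loop into the one-liner and reintroduce the ordering bug. A comment such as "loop over the items" would only restate what the code already says.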

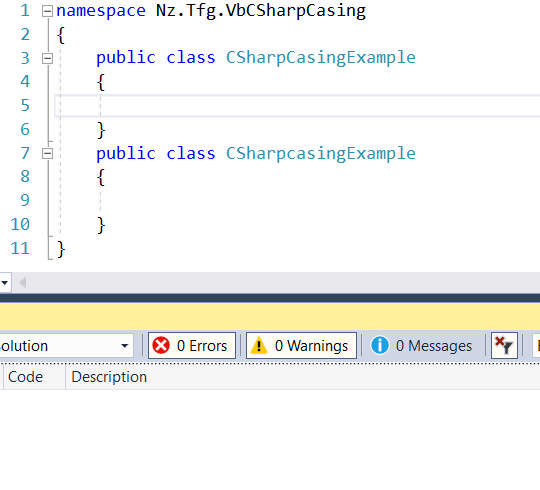

A good example can also be found in the .NET Framework Reference Source, this time in the DateTime class. At first glance it is not clear why the adjustment in the code would add double the day remainder in milliseconds when the time is negative. However, the comment above it explains why we would want to do such a thing, and even uses a clear example to demonstrate it.
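For readers who want to see the arithmetic, here is a rough Python sketch of that kind of adjustment. It is not the .NET source. It assumes a date stored as a decimal number of days in which the whole part is signed but the fractional part is always a positive time of day, so -12.25 means 12 days back and then 6 hours forward. The function and constant names are my own.

```python
MILLIS_PER_DAY = 24 * 60 * 60 * 1000


def day_number_to_millis(value):
    """Convert a decimal day count to milliseconds from the epoch."""
    # Round to the nearest millisecond; int() truncates toward zero.
    millis = int(value * MILLIS_PER_DAY + (0.5 if value >= 0 else -0.5))

    # WHY: 12.5 means 12 days and 12 hours after the epoch, all positive.
    # But -12.25 means minus 12 days and still PLUS 6 hours, as if the
    # value were -11.75. The plain multiplication above treats the 6 hours
    # as negative, so for negative values we add back double the
    # part-of-day remainder to flip its sign.
    if millis < 0:
        # Remainder with the sign of millis (Python's % would return a
        # positive value here, so it is computed on the magnitude).
        remainder = -((-millis) % MILLIS_PER_DAY)
        millis -= remainder * 2
    return millis
```

Read without the comment, subtracting twice a remainder looks like a bug. With the comment and its worked example, the line is obviously intentional.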

Commit Comments

This concept of explaining why finds even more value when it comes to commit comments.

How often do you see an odd design decision, only to look into the history of that file and find that the comment is “Added files.”? A comment like that is worse than worthless: not only does it tell you nothing new, it irritates you, and that irritation sits with you as you try to fix whatever is wrong. Again, explain WHY you have made the changes you have. Explain why you chose one pattern or design over another. When writing these comments, imagine that you will be coming back to this 12 months from now. When we can barely remember why we made choices a few weeks ago, how can we expect to remember why we made them 52 weeks later?

So, to conclude: “self-documenting code” applies only to how something works. In no way can it show why changes were made or designs chosen. When you come back months or years later, the why is more valuable than any amount of how or what comments.